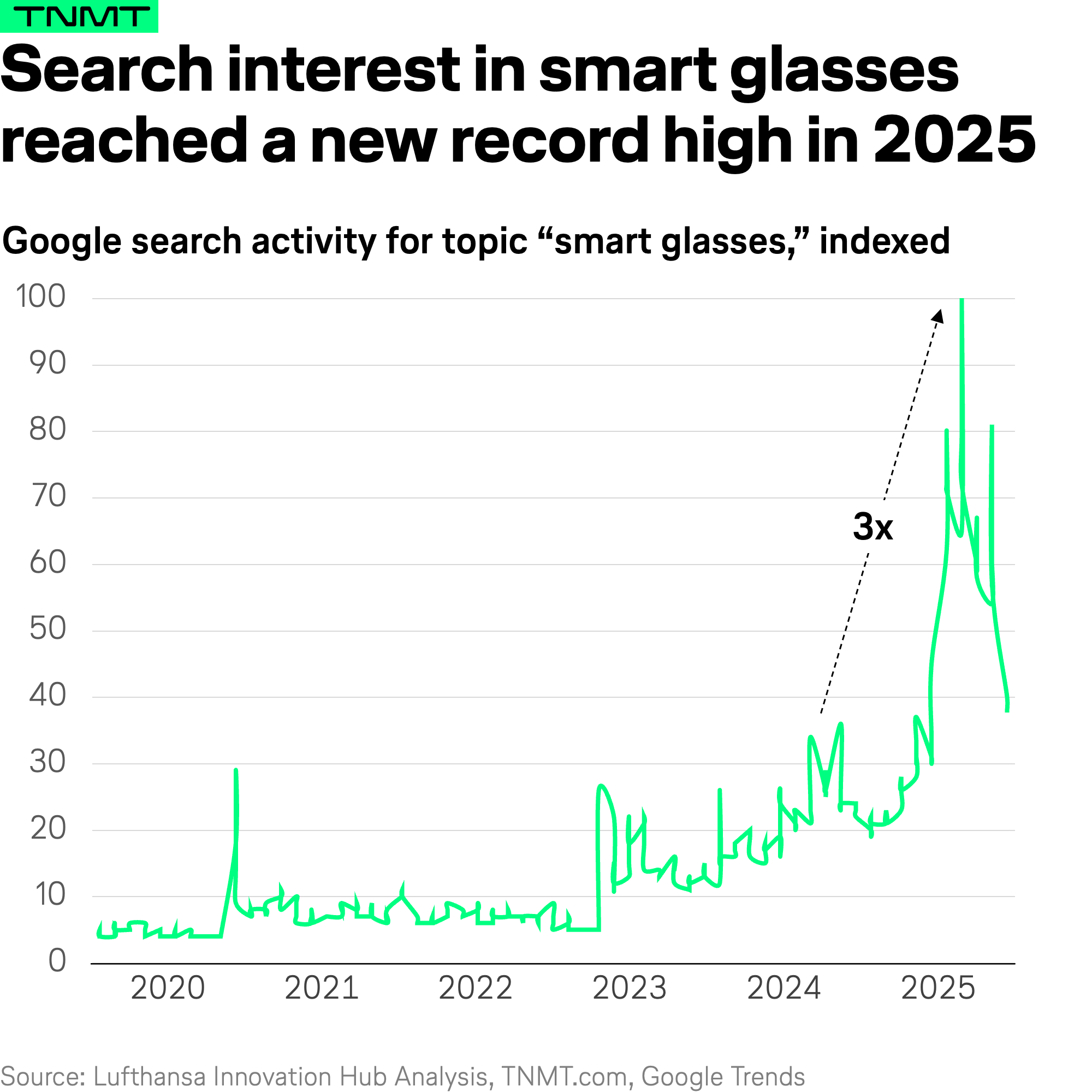

If we look back at the key technology trend that defined 2025 (not just in travel, but across the entire tech landscape), it’s undoubtedly AI.

But there’s a risk that all this AI noise makes us overlook a few other technologies that are quietly making real progress.

One of them is a theme that, for many, felt overhyped the first time around: smart glasses and mixed reality.

Quick reminder:

- When Apple launched the Apple Vision Pro in early 2024, many analysts declared it “the next computing interface” and a potential smartphone replacement.

- That didn’t quite play out. Sales disappointed, expectations cooled, and Apple recently scaled back production.

But here’s the important nuance: while heavy, expensive XR headsets struggled, a lighter, more pragmatic class of smart glasses has been gaining traction.

And in 2025, the buzz around them picked up sharply again.

So where is that renewed momentum coming from?

From a series of concrete moves by some of the biggest players in tech:

Google kicked things off with Android XR in late 2024 and used its 2025 developer conference to show how AI-powered, voice-first interfaces (via Gemini) can run on smart glasses.

The demos focused on real-world use cases like navigation, contextual information, and product support (not sci-fi fantasies).

Meta already had a solid run with its Ray-Ban smart glasses, combining cameras with an AI assistant.

- In September 2025, Meta doubled down with a second-generation Ray-Ban Meta model and the Meta Ray-Ban Display, which adds a lightweight in-lens display and is controlled via the Meta Neural Band, a wristband that translates muscle signals into inputs.

- Price point: just under $800, so a very different bet than the $3,500 Vision Pro.

Outside the consumer world, Amazon is investing in smart delivery glasses for its logistics workforce, helping drivers spot hazards, navigate to doorsteps, and improve last-mile delivery efficiency. No hype. Pure productivity.

And finally, incumbents are circling again.

Samsung recently released new XR hardware that echoes Apple's early Vision Pro direction. Apple itself, meanwhile, is rumored to be working on lighter AR glasses designed as an accessory to existing devices like the iPhone. Given the mixed reception of the Vision Pro, that looks like a far more pragmatic approach.

Taken together, all these signals suggest something important: smart glasses aren’t dead; they’re being rebooted. Not as a smartphone replacement, but as a contextual, AI-powered layer that augments what we already do.

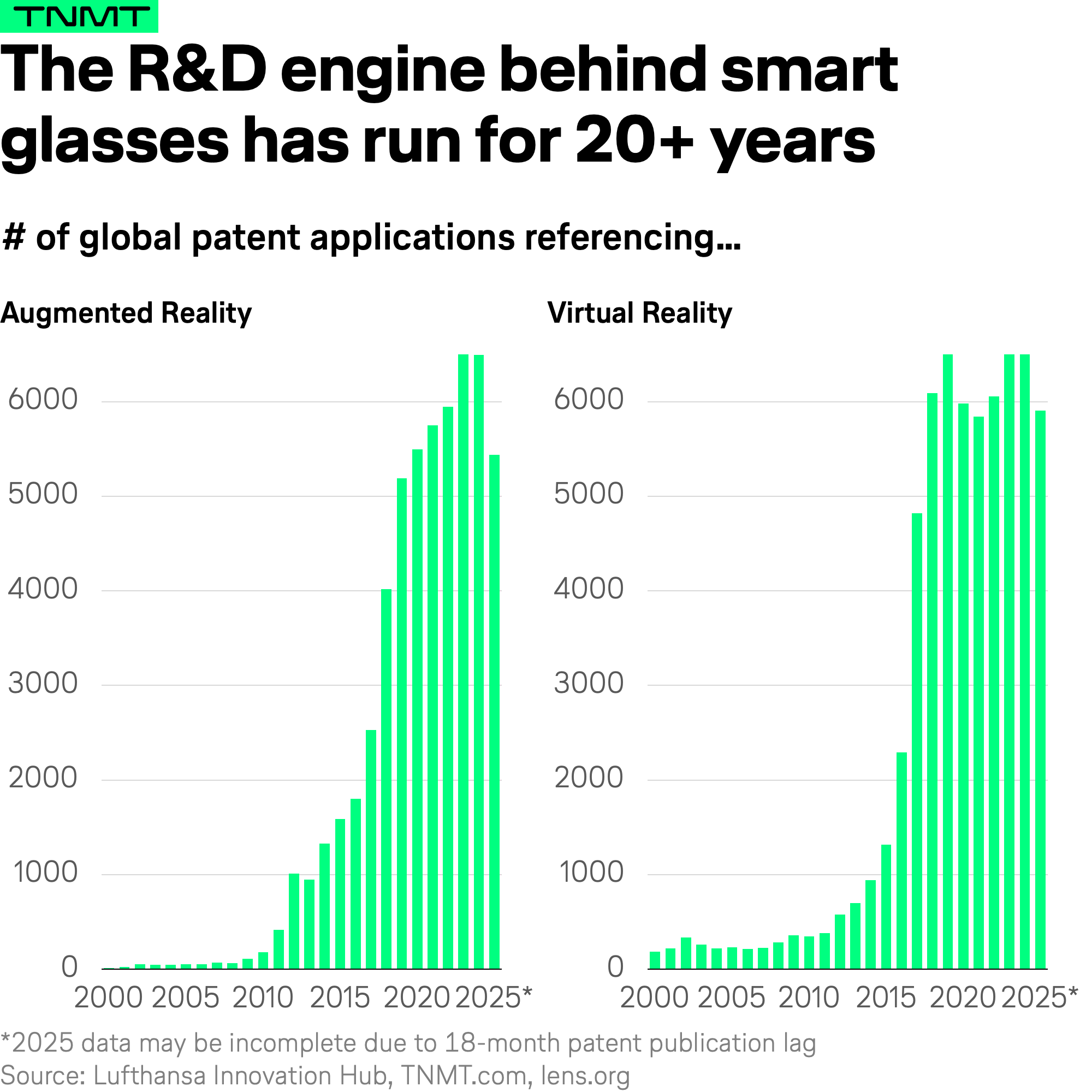

A look at patent activity reinforces this view.

Patent filings related to immersive technologies such as VR (Virtual Reality) and AR (Augmented Reality) have grown steadily over the past decade and reached a consistently high level in the early 2020s, with no signs of slowing down since.

While consumer sentiment around products like the Vision Pro may have cooled, R&D activity in smart glasses and immersive interfaces clearly hasn’t.

The industry kept building.

And that’s where things get especially interesting for Travel and Mobility Tech.

- Travel is one of the most visual industries out there.

- It is also one of the most context-heavy and complex sectors.

- Travelers constantly need guidance, reassurance, and real-time information across unfamiliar environments: airports, train stations, cities, hotels, and attractions.

AR and VR are uniquely suited to meet that need.

They can overlay navigation, translate environments, explain what you’re seeing, and guide you through multi-step journeys (in the moment, in context, and without pulling you out of the experience).

Our hypothesis: if smart glasses find their killer use case, travel might just be it.

And there’s another important shift that makes this especially relevant now.

Earlier generations of immersive hardware (like the Apple Vision Pro or the Meta Quest) were built technology-first and wearability-second. Powerful, yes. But not exactly designed for everyday use, comfort, or long stretches of real-world movement.

The latest wave of smart glasses flips that equation.

Today’s devices prioritize wearability first, technology second, and that changes everything. Case in point: the Meta Ray-Ban smart glasses, developed in collaboration with eyewear giant EssilorLuxottica (the parent company of Ray-Ban and Oakley).

The result?

- The best-selling smart glasses worldwide.

- The partnership worked so well that Meta even acquired a minority stake in EssilorLuxottica in July 2025.

The other major development is that Meta has begun rolling out preview access to its Meta Wearables Device Access Toolkit, allowing software developers to experiment with using audio and visual input from the glasses to augment mobile apps. After years as a closed platform, the glasses now look set for a public release to developers everywhere.

The key takeaway:

- Smart glasses are no longer trying to replace your phone or laptop.

- They’re trying to disappear into everyday life, which is exactly what travel needs.

- Widespread experimentation and prototyping of use cases are coming.

But enough hardware context.

Let’s look at what this actually means for travel based on use cases we already see in practice today.

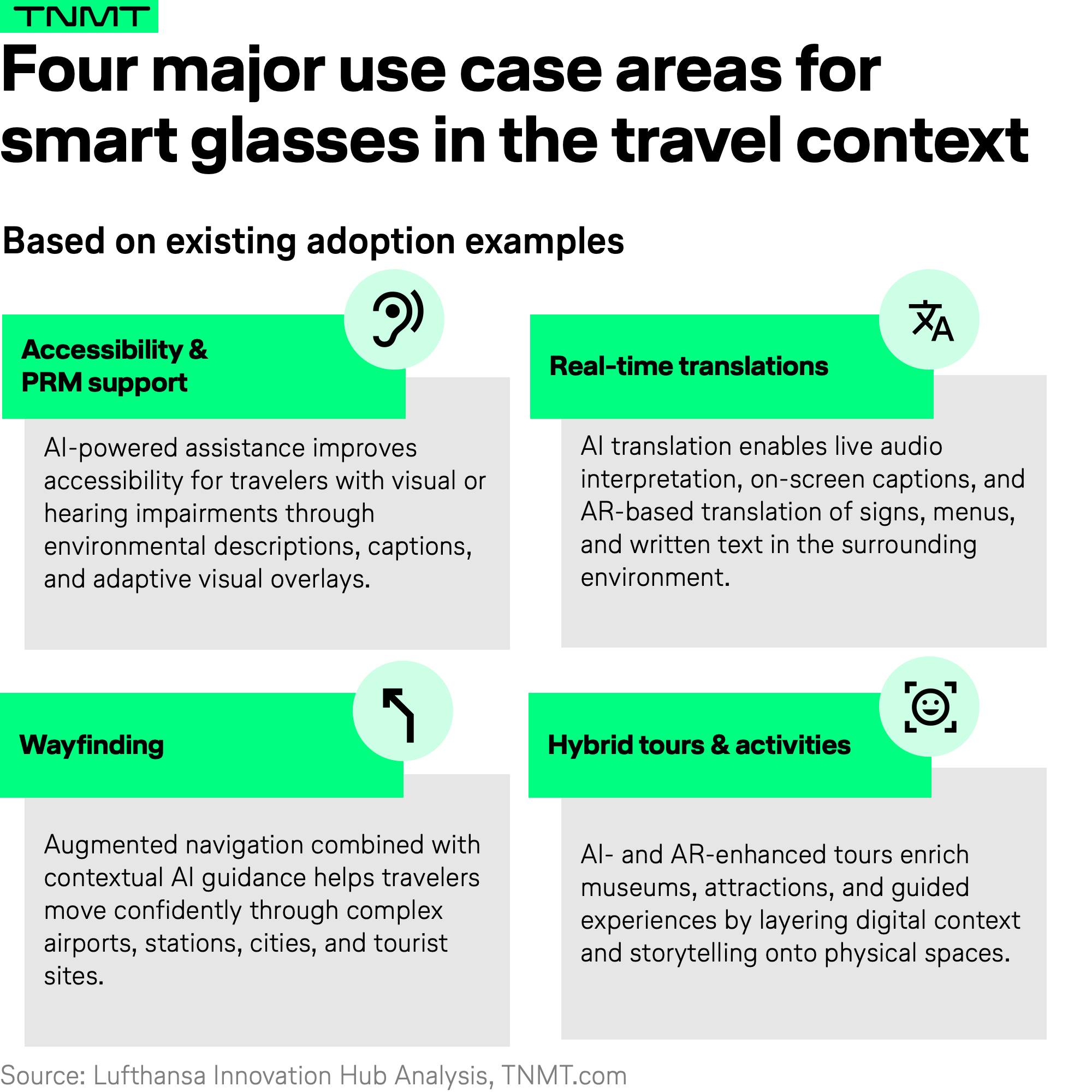

Drawing on existing deployments and early adoption, we see four major areas of smart-glasses use cases emerging across the travel journey.

Let’s go through each of these use cases one by one to make them more concrete and applicable.

1. Making travel accessible by default

Smart glasses may not replace smartphones, but they could fundamentally expand who can travel independently, and how.

For blind and low-vision travelers, AI-powered smart glasses equipped with cameras can translate visual surroundings into real-time audio guidance. Examples include:

- Navigation cues like "You've just passed gate 67 and are approaching gate 68 on your right."

- Reading signage and documents aloud ("Your boarding pass says seat 5C.").

- Identifying objects and obstacles in unfamiliar environments like airports or train stations.

A standout example is Meta Ray-Ban’s collaboration with Be My Eyes, which allows users to connect instantly to a human volunteer who sees the live camera feed and helps with specific tasks.

Other specialized players, such as Envision, offer similar functionality with AI-first assistants that describe the environment continuously, without the need to pull out a phone.

For travelers with hearing impairments, smart glasses open another powerful door. Augmented reality layers can live-caption conversations and ambient audio, turning spoken communication into text directly in the wearer’s field of view.

- Solutions like XRAI Glass specialize in this capability and have already launched pilots at airports such as Dallas–Fort Worth, where live captioning supports boarding announcements and gate changes.

- Others, like SignGlasses, combine cameras with on-demand access to certified sign-language interpreters, making guided tours, attractions, or museum visits accessible without separate devices or special arrangements.

Then there’s color-vision deficiency, an often overlooked but widespread challenge.

- Around 8% of men and 0.4% of women in European populations are affected by red-green color blindness, which can make color-coded maps, signage, transit systems, and even museum exhibits difficult to interpret.

- Smart glasses with color-adjustment filters can enhance contrast and help wearers distinguish hues more clearly.

- EnChroma, a leading player in this space, already partners with museums, parks, and attractions to offer smart glasses as an additional service for visitors, helping ensure experiences aren’t lost due to color-based design choices.

Beyond these cases, research is also exploring how smart glasses could support travelers with cognitive impairments such as aphasia, dementia, or memory loss.

While this area is still early, studies point toward similar building blocks: AI assistants, contextual prompts, and the ability to call human support that sees the user’s camera feed. Much of this work overlaps with medical and rehabilitation research, but the travel implications are obvious.

In short: accessibility may turn out to be one of the clearest near-term killer use cases for smart glasses in travel. Not because the technology is flashy, but because it quietly removes barriers that have existed for decades.

2. When language barriers quietly disappear

Language has always been one of the biggest friction points in travel.

And while translation apps have helped, they’ve never quite solved the problem (pulling out a phone mid-conversation or mid-tour is still a break in the experience).

Smart glasses change that dynamic.

- AI-enabled live voice translation can now run directly through smart glasses, either as spoken audio or as augmented captions visible in the wearer’s field of view.

- The result: museum tours, guided city walks, and in-destination interactions suddenly become accessible without language barriers.

This functionality is already available today. Smart-glasses players like Even Realities and Rokid offer real-time captioning and translation across multiple languages (and tourism providers are beginning to adopt it).

One standout example comes from Paris.

- At the Comédie-Française, one of the world’s most renowned theaters, visitors can use smart glasses developed in collaboration with Panthea.

- These glasses provide real-time translations in English, French, French Sign Language (LSF), and adapted French for Deaf and hard-of-hearing audiences, making live performances accessible without altering the stage experience.

Similar experiments are happening elsewhere.

At the Holland Festival in the Netherlands, visitors used AI-powered smart glasses developed by Het Nationale Theater and XRAI Glass to convert live spoken dialogue into real-time subtitles in 220+ languages (a scale that would be impossible with traditional human interpretation).

Beyond spoken language, smart glasses are also starting to tackle written text translation through AR overlays. While still less common than captioning, players like HTC (with Vive Eagle) and Meta’s Ray-Ban Display already support translating signage, menus, and printed guidance directly in the wearer’s line of sight. For travelers, this means understanding local environments instantly without switching apps or guessing.

In a nutshell: translation through smart glasses is about making destinations feel immediately legible and removing one of travel’s oldest sources of friction.

3. Navigation without pulling out your phone

Navigation is one of those travel tasks we all do constantly.

And yet it’s still surprisingly clunky.

Augmented navigation layers already exist today, mostly through mobile apps. Google Maps’ Live View is a good example, offering AR overlays in cities like Paris, New York, or Los Angeles.

But there’s a catch: it only works if you’re holding your phone up in front of you, which is not ideal when you’re dragging luggage, checking boarding passes, or simply trying to enjoy where you are.

Smart glasses promise to fix exactly that.

By moving AR navigation into a hands-free, glanceable display, smart glasses make wayfinding far more seamless. That’s why navigation is a core feature for several current devices, including Even Realities’ G2, Meta’s Ray-Ban Display, and RayNeo’s X3 Pro.

In the travel context, this matters a lot. Whether you’re exploring a new city or navigating complex transportation hubs like airports, train stations, or transit interchanges, maps and directions are mission-critical.

And today, wayfinding is still a major pain point for travelers.

As a result, several airports, including Zurich Airport (with Google Live View) and Gatwick Airport, have already experimented with AR-based wayfinding. Specialist providers like GoodMaps focus specifically on indoor terminal navigation.

The limitation so far isn’t the technology; it’s the interface.

- Smartphone-based (i.e., handheld) AR still competes with luggage, documents, and basic situational awareness.

- Smart glasses remove that friction by embedding guidance directly into the traveler’s field of view.

Importantly, navigation doesn’t always need to be visual.

- Modern smart glasses combine AR with contextual AI assistance, allowing travelers to switch between visual cues and audio-only guidance.

- In environments where overlays might distract (think historic city centers, architectural landmarks, or cultural sites), spoken AI guidance can be the more elegant option.

- Meta’s Ray-Ban AI glasses, for instance, can answer questions about landmarks or directions without displaying anything at all, almost like a personal travel assistant.

4. Upgrading destinations, not just devices

If there’s one area where smart glasses can massively elevate the travel experience, it’s destinations and attractions.

This is where travel moves beyond getting from A to B and turns into what people actually travel for: discovering, learning, and being immersed.

Museums, heritage sites, theme parks, and live performances all share the same challenge: how to make experiences richer, more engaging, and more memorable, without overwhelming visitors or forcing them to stare at their phones.

Today, many attractions are already experimenting with AR to refresh exhibitions and storytelling.

- Typically, this means scanning QR codes with a smartphone to unlock additional content.

- It works, but it breaks immersion.

Smart glasses promise a more natural evolution: hands-free, always-on contextual layers, embedded directly into what visitors are seeing.

Some destinations are already pushing in this direction.

- In 2023, ARtGlass launched a two-hour extended-reality tour at Hegra, a UNESCO World Heritage site in Saudi Arabia, using XR to add historical context and narrative layers to the ancient ruins.

- More recently, Disney announced a collaboration with Meta, exploring how Ray-Ban AI glasses could act as a virtual park guide, answering questions, guiding visitors, and extending storytelling beyond static signage.

At the same time, a broader shift toward hybrid and immersive entertainment is underway.

Venues are experimenting with experiences that blend physical presence and digital immersion at a much deeper level.

- In 2025, the Paris Opera launched La Magie Opéra, a VR experience offered as an add-on to visiting the Palais Garnier.

- In London, the Natural History Museum introduced Vision of Nature, a mixed-reality exhibition imagining the world 100 years from now.

These examples also hint at where things are heading.

Smart glasses are likely not the ultimate interface for immersive travel experiences, especially at destinations and attractions.

In the longer run, it’s far more likely that immersion becomes built into the environment itself.

Museums, heritage sites, and venues will increasingly integrate immersive elements into exhibitions, architecture, and staging through spatial audio, projection, mixed-reality rooms, and digitally augmented installations. These can connect seamlessly to wearables and screens of all kinds, i.e., the digital ecosystem of services and devices that future visitors will carry.

In that context, today's standalone smart glasses might act as a bridge technology. They offer a scalable, low-friction way to enrich individual experiences now, while destinations experiment, invest, and learn how to weave immersive storytelling more organically into physical spaces.

Seen this way, smart glasses don’t compete with the future of immersive travel; they prepare it. They help travelers get comfortable with layered experiences, and they help destinations test what works before immersion fully disappears into the environment.

A reality check (for now)

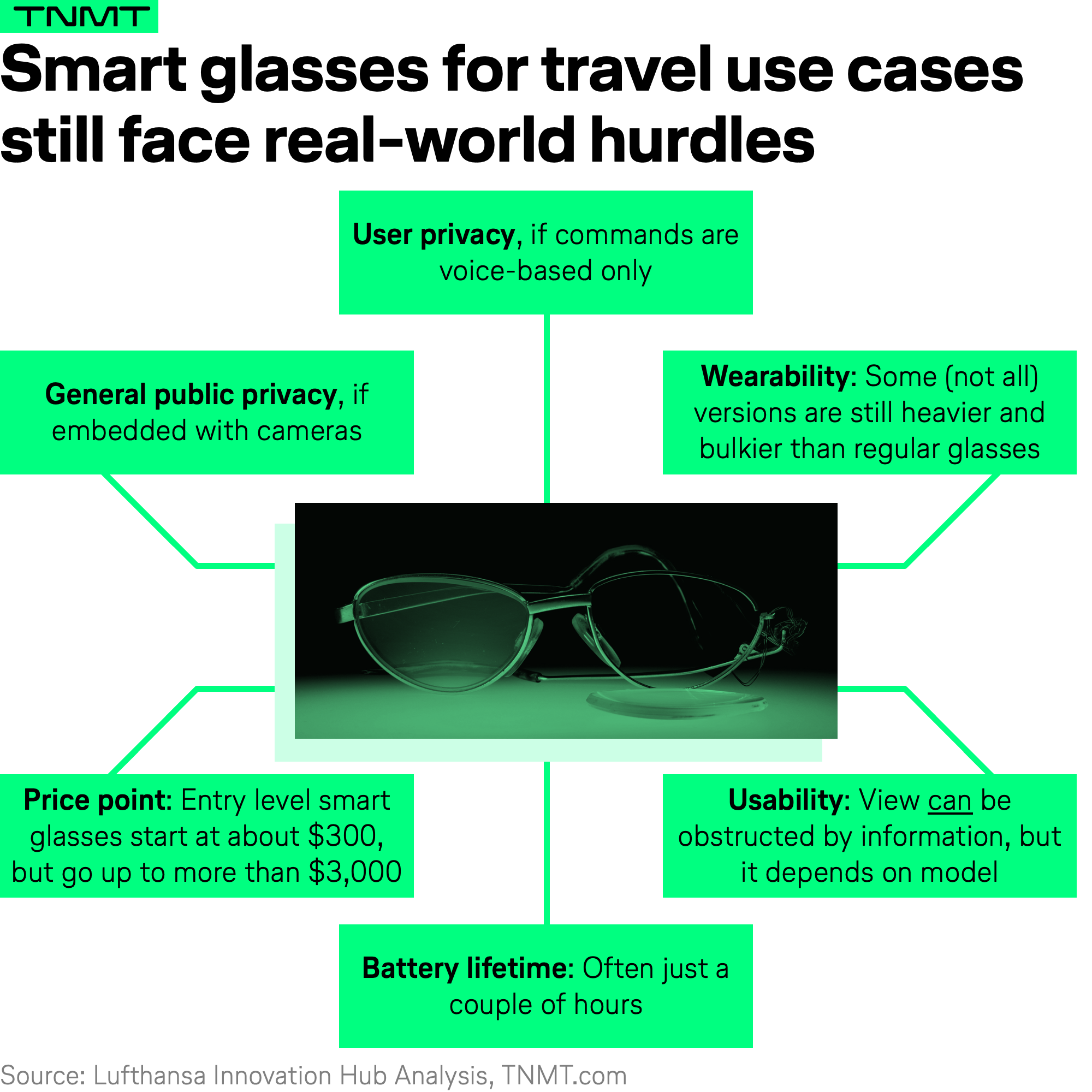

Before we wrap up: none of this is to say that smart glasses are already a perfect, ready-to-use travel companion.

In fact, if you read the comment section under Ray-Ban Meta’s own YouTube video titled “Ray-Ban Meta as the Ultimate Travel Assistant,” you’ll quickly notice where today’s limitations still lie.

Users regularly struggle with:

- Audio translation that doesn’t yet work reliably across all languages.

- Region locks that restrict core features (including translation) to the user's home country, which largely breaks the use case for international travel.

- Missing or incomplete wayfinding and Google Maps integration (arguably the most fundamental travel use case of all).

On top of that, there are still open questions around battery life, comfort for all-day use, and, inevitably, privacy and data protection, both from a user and regulatory perspective.

So no: smart glasses haven’t reached their full travel potential yet.

But that’s precisely what makes this moment interesting.

The last few years have shown real technological leaps (in AI, computer vision, voice interfaces, and hardware miniaturization), and many of today’s shortcomings feel less like dead ends and more like engineering problems waiting to be solved.

That’s why, despite all the caveats, we see smart glasses as one of the most promising hardware trends when it comes to making travel meaningfully better.

Not flashier.

Not more futuristic.

Just more intuitive, more inclusive, and more supportive in moments that actually matter.